If you’ve watched high-def videos of Gratuitous Space Battles 2, or been lucky enough to try it at EGX, then you may have noticed all that gratuitous GUI fluff that animates and tweaks and flickers all over the place, because… frankly, I like that kinda nonsense, and it’s called GRATUITOUS, so I can get away with it. This sort of stuff…

Anyway… I love it, and it’s cool, but until today it was coded really badly. I had a bunch of helper functions to create these widgets, and I sprinkled them all over the GUI for fun. For example there was a function to add an animated horizontal bar that goes up and down. Wahey. Also there are functions to add random text boxes of stuff. The big problem is that each one was an isolated little widget that did its own thing. In the case of a simple progress bar widget, it would have a rectangle, and a flat-textured shaded box inside it that would animate. That meant 2 draw calls: one for the outline of the box (using a linelist) and another for the box inside it (a 2-triangle trianglestrip). A single GUI window might have 6 or even 20 widgets like that… so suddenly just adding a dialog box means an additional 40 draw calls.

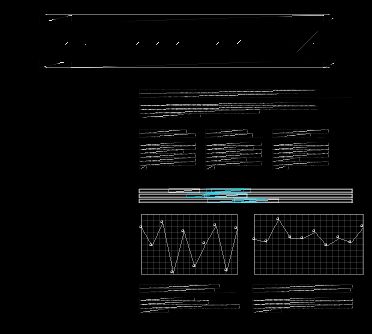

Normally that doesn’t matter because a) 40 draw calls isn’t a lot, and b) graphics cards can handle it. However, it’s come to my attention that some Intel integrated cards, which are actually surprisingly good at fill rate and general poly-drawing, get really pissed off, performance-wise, by too many draw calls. Plus… 40 draw calls isn’t a lot, if that’s your ‘thing’, but if there are 40 on the minimap, 40 on each score indicator, 40 on the comms readout, 40 on each of 3 ship inspector windows, then suddenly you have several hundred draw calls of GUI fluff, before you do the actual real GUI, let alone the big super-complex silly space battle, and yup… I’ve seen 4,000 draw calls in a frame. Ooops. To illustrate this, here is that top bunch of widgets in wireframe.

That’s a lot of stuff being drawn just for fluff, so to ease the burden on lesser cards, I should be batching it all, and now I am. That used to be about ten trillion draw calls and now it’s about five. I have a new class which acts as a collection of all the widgets on a certain Z-level, and it goes through drawing each ‘type’ of them as its own list. Nothing actually draws itself any more, it just copies its verts to the global vertex buffer, and then when I need to, I actually do a DrawIndexedPrimitiveVB() call with all of them in one go.
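For anyone who wants the general shape of that, here is a rough sketch of the batching idea in plain C++. It is not the actual GSB2 code: `WidgetBatch`, `WidgetType`, `Vertex`, `addQuad` and `flush` are all made-up names, the vertex layout is a guess, and a callback stands in for the real `DrawIndexedPrimitiveVB()` call so the example does not depend on DirectX.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <vector>

// Hypothetical vertex layout; the real format is not shown in the post.
struct Vertex { float x, y, z; std::uint32_t colour; };

// Hypothetical widget categories; each one gets its own list.
enum class WidgetType { ProgressBar, TextBox };

// Collects geometry for every widget on one Z-level, grouped by type.
class WidgetBatch {
public:
    // Widgets no longer draw themselves: they append a quad
    // (4 verts, 6 indices forming two triangles) to their type's list.
    void addQuad(WidgetType type, float x, float y, float w, float h,
                 std::uint32_t colour) {
        Bucket& b = buckets_[type];
        assert(b.verts.size() + 4 <= 65536 && "16-bit indices would overflow");
        const auto base = static_cast<std::uint16_t>(b.verts.size());
        b.verts.push_back({x,     y,     0.0f, colour});
        b.verts.push_back({x + w, y,     0.0f, colour});
        b.verts.push_back({x + w, y + h, 0.0f, colour});
        b.verts.push_back({x,     y + h, 0.0f, colour});
        const std::uint16_t quad[6] = {0, 1, 2, 0, 2, 3};
        for (std::uint16_t i : quad) {
            b.indices.push_back(static_cast<std::uint16_t>(base + i));
        }
    }

    // Issues one draw per non-empty type, then empties the lists.
    // 'draw' stands in for the real indexed draw call.
    // Returns how many draws were issued.
    template <typename DrawFn>
    int flush(DrawFn&& draw) {
        int calls = 0;
        for (auto& [type, b] : buckets_) {
            if (b.indices.empty()) continue;
            draw(type, b.verts, b.indices);
            ++calls;
            b.verts.clear();
            b.indices.clear();
        }
        return calls;
    }

private:
    struct Bucket {
        std::vector<Vertex> verts;
        std::vector<std::uint16_t> indices;
    };
    std::map<WidgetType, Bucket> buckets_;
};
```

With something like this, twenty progress bars and a text box on the same Z-level cost two draws per frame (one per type) however many widgets get added, instead of a couple of draws per widget.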

Ironically, this all involves MORE verts than before, because whereas drawing a 12 pixel rectangle with a line list involves 4 verts, drawing it as a trianglelist uses loads more, but I’m betting (and it’s a very educated bet) that adding the odd dozen verts is totally and utterly offset by doing far, far fewer draw calls.
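To put a rough number on "loads more": one plausible way (an assumption, the post doesn’t say how the outlines are actually built) to draw a rectangle outline as a trianglelist is four thin filled strips, one per edge. The helper names below are invented for illustration.

```cpp
#include <vector>

struct Vec2 { float x, y; };

// Append one filled rectangle as two triangles (6 unindexed verts).
void appendRect(std::vector<Vec2>& out,
                float x0, float y0, float x1, float y1) {
    out.push_back({x0, y0});
    out.push_back({x1, y0});
    out.push_back({x1, y1});
    out.push_back({x0, y0});
    out.push_back({x1, y1});
    out.push_back({x0, y1});
}

// Build a rectangle outline of thickness t as four thin filled strips.
std::vector<Vec2> outlineAsTriangleList(float x, float y,
                                        float w, float h, float t) {
    std::vector<Vec2> v;
    appendRect(v, x,         y,         x + w, y + t);      // top edge
    appendRect(v, x,         y + h - t, x + w, y + h);      // bottom edge
    appendRect(v, x,         y + t,     x + t, y + h - t);  // left edge
    appendRect(v, x + w - t, y + t,     x + w, y + h - t);  // right edge
    return v;
}
```

Built this way, the outline is 24 unindexed verts (or 16 verts plus 24 indices if the corners of each strip are shared) against the 4 of the linelist version, but it can sit in the same batch as every other triangle-based widget.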

This is how I spend Sunday Afternoons when it’s too cold for archery…